I looked into why this happens. The likely answer to your question is that the limit is being set by one of the underlying components. Here is what I found:

According to the manual, max_hosts can be set in the /etc/openvas/openvas.conf file. However, openvas-scanner also reads preferences from the gvm-libs module through the get_prefs() → prefs_init() function.

In that function, the max_hosts variable has a default of 30.

In several places in openvas-scanner, for example attack_network_init() in openvas.c, the configuration settings are loaded in this order:

1. set_default_openvas_prefs () sets the prefs from the openvas_defaults array. This array is hardcoded rather than read from the config file, and it does not include the scanner settings.
2. prefs_config (config_file) applies the settings in the given file as preferences. This is the step that would allow /etc/openvas/openvas.conf to set max_hosts.
3. set_globals_from_preferences () then overrides the config file settings with the hardcoded gvm-libs defaults mentioned above.

So it seems that the hardcoded gvm-libs default is what prevents you from going higher than 30.

I don't think a setting such as max_hosts = 50 in the /etc/openvas/openvas.conf configuration file will supersede this hardcoded limit in gvm-libs/base/prefs.c.

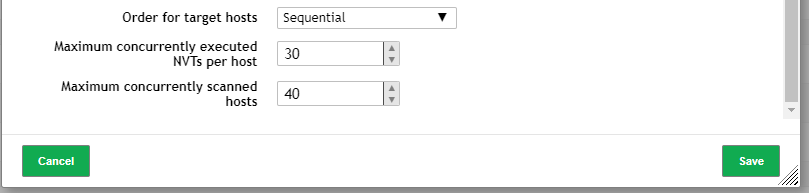

Also, as far as I can tell, there is no way to set a global preference via the web interface, only the per-scan limit, which (again, as far as I can tell) does not override the hardcoded values in gvm-libs/base/prefs.c.

IMHO, this could be changed so that the openvas.conf file overrides the gvm-libs default setting, by calling prefs_config (config_file) after set_globals_from_preferences () instead of before it.

Also, although gvmd accepts a --modify-setting startup flag, I don't think max_hosts is stored in the database in a way that can be changed through it.

Furthermore, another configuration option, the max_sysload setting, might help you if you can find a way to overcome the global max_hosts setting. Combined with a very high max_hosts value, setting max_sysload = 90 or maybe higher would allow you to max out your CPU bandwidth.